Research

Our Research

Our research can be applied to a range of domains (e.g., healthcare, aviation, military operations, and the automotive industry) that are becoming increasingly complex with the advent and adoption of new technologies. It is critical to consider cognitive ergonomics and systems engineering to support the design of interfaces that can present the "right information to the user at the right time." We currently have ongoing research on the following topics:

Multimodal Displays

One of the main focuses of this lab is how to address visual data overload in data-rich environments. One promising means of addressing this challenge is the introduction of multimodal interfaces, i.e., interfaces that distribute information across different sensory channels, including vision, audition, and touch (with a focus on touch). The broad research goals are to identify what types of information are best presented through each sensory channel and in what contexts.

Adaptive Displays

In complex environments, the demands on operators, and consequently the information they need, are always changing. The goal here is to develop interfaces that adjust the nature of information presentation in response to various sensed parameters and conditions. For instance, an interface might take into account user preference, task demands, and environmental conditions.

Cognitive Limitations

Displays will be compromised if their design does not take into account the limits of human perception and cognition. For example, one phenomenon of interest is change blindness, i.e., the surprising difficulty people have in detecting even large changes in a visual scene or display when the change coincides with another visual event.

Ongoing Projects

CAREER: Collaboratively Perceiving, Comprehending, and Projecting into the Future: Supporting Team Situational Awareness with Adaptive Multimodal Displays (Funded by NSF)

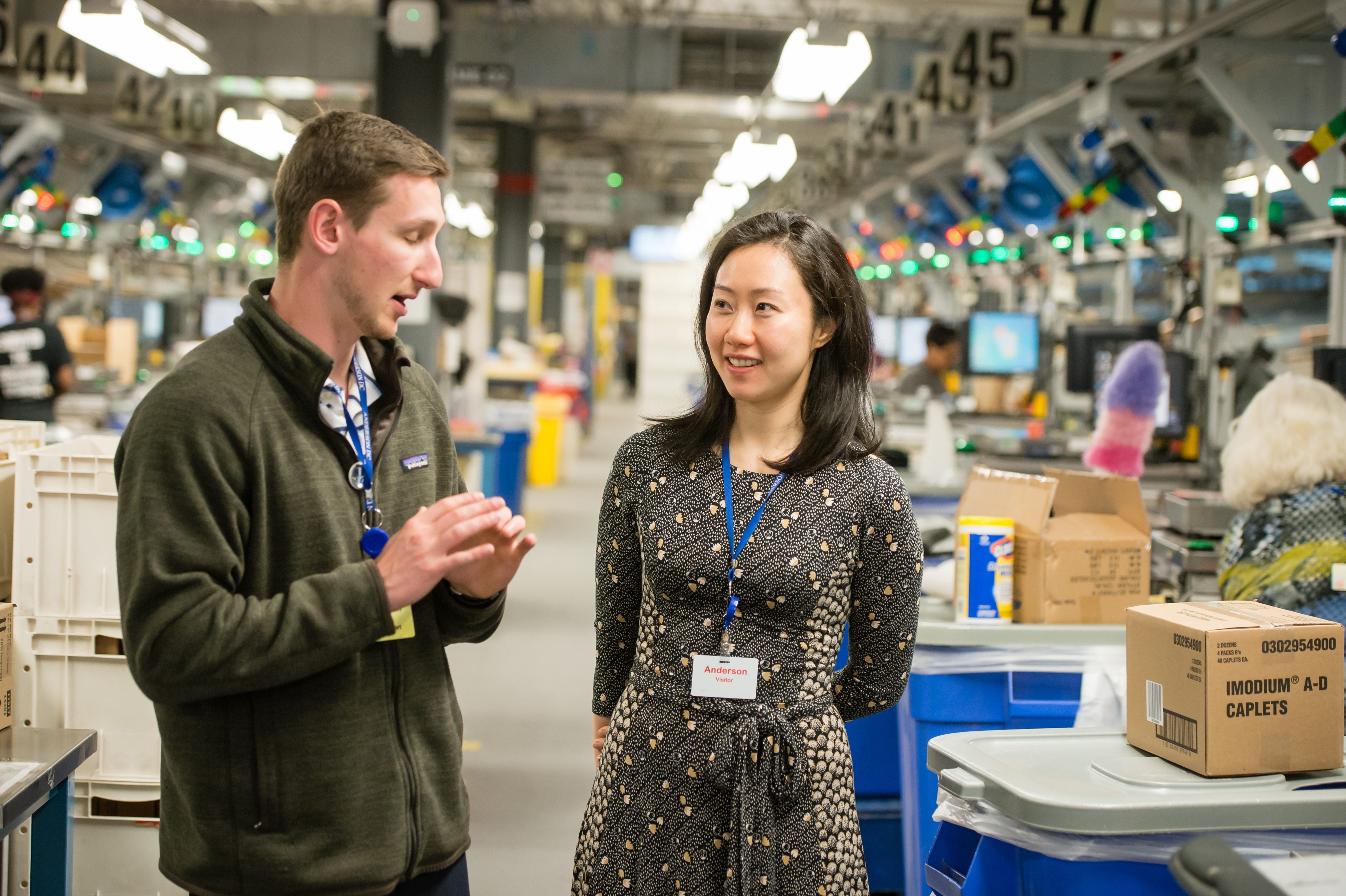

Especially in data-rich and rapidly changing environments, effective teams need to give members the information needed to develop awareness of their own, their teammates', and the overall team's current situation. However, attentional demands are high on such teams, raising questions of how to both monitor those attentional demands and develop systems that adaptively provide needed information not just through visual displays that are often overloaded, but through other senses including touch and sound. Most existing work on adaptive multimodal interfaces for situational awareness focuses on individuals; this project will address how to do this work for teams, using unmanned aerial vehicle (UAV) search and rescue as its primary domain. This includes developing conceptual models that connect individual and team-level situational awareness, algorithms that use eye gaze data to assess both situational awareness and workload in real time, and multimodal display guidelines that adaptively present information to the most appropriate team members through the most effective modes. This work will fundamentally advance research on understanding and designing to support team interaction, leading to practical improvements in a variety of safety-critical domains. The project also has a significant educational component, providing research opportunities for both graduate and undergraduate students and conducting design activities aimed at outreach and broadening participation in STEM disciplines, including workstation design to support teams of people with disabilities in manufacturing contexts. (Link)

Previous Projects

CRII: CHS: Collaboratively Perceiving, Comprehending, and Projecting into the Future: Supporting Team Situational Awareness with Adaptive Collaborative Tactons (Funded by NSF)

Data overload, especially in the visual channel, and associated breakdowns in monitoring already represent a major challenge in data-rich environments. One promising means of overcoming data overload is the introduction of multimodal displays, which distribute information across various sensory channels (including vision, audition, and touch). In recent years, touch has received more attention as a means to offload the overburdened visual and auditory channels, but much remains to be discovered in this modality. Tactons, or tactile icons/displays, are structured, abstract messages that can be used to communicate information in the absence of vision. However, the effectiveness of tactons may be compromised if their design does not take into account that complex systems depend on the coordinated activities of a team. The PI's goal in this project is to establish a research program that will explore adaptive collaborative tactons as a means to support situational awareness, that is, the ability of a team to perceive and comprehend information from the environment and predict future events in real time. Project outcomes will contribute to a deeper understanding of perception and attention between and across sensory channels for individuals and teams, and to how multimodal interfaces can support teamwork in data-rich domains. (Link)

Realizing Improved Patient Care through Human-centered Design in the OR (Funded by AHRQ)

Adverse events such as surgical site infections and surgical errors are a major problem in the operating room (OR) due to the high vulnerability of the patient and the complex interactions required among providers of different disciplines, a range of equipment and technology, and the physical space where care is provided. Two to five percent of all patients who undergo an operation will develop a surgical site infection, leading to significant mortality and morbidity. Distractions and interruptions are major causes of medical errors during surgery and often lead to serious patient harm. While significant efforts to improve patient safety have focused on enhancing skills and training for surgical staff, little effort has been directed at the environment in which healthcare providers work. The overarching goal of the proposed 'Realizing Improved Patient Care through Human-Centered Design in the OR' (RIPCHD.OR) learning lab is to develop an evidence-based framework and methodology for the design and operation of a general surgical operating room to improve safety. RIPCHD.OR will use a multidisciplinary human-centered approach incorporating evidence-based design, human factors, and systems engineering principles. (Link)

A grid computing laboratory for integrative behavioral and optimization research (Funded by AFOSR)

The IE department currently has ample infrastructure to carry out defense-oriented research and research-related education. However, there now exists a unique opportunity in the IE department to investigate multi-agent problems that have heretofore been unexplored. By a multi-agent system, we mean a complex system in which several entities interact to solve a problem or perform a task. The agents can be cooperative or non-cooperative, and can represent humans or compute nodes. (Link)

Funded By